Test automation plays a significant part in ensuring continuous testing and maintaining the high quality of the tested product. In this article, we go over the main stages of automated testing for large projects, using one of our current projects as an example.

We’re working on a multi-component enterprise solution for monitoring client machines, detecting malware, and collecting artefacts for further analysis. Using this desktop application as an example, we’ll describe the key stages of automated testing on complex projects and determine the pros and cons of autotests. We’ll also provide a detailed description of our current autotesting framework so you can better understand what components your framework may consist of.

This article will be useful for quality assurance specialists and testers who want to learn more about mastering automated testing for a big project.

The importance of autotesting

With more and more companies choosing the continuous delivery model of software development, running all tests at once at the end of the development cycle is no longer an option. Products are now tested in small pieces, feature by feature. And as these features get updated, some tests need to be repeated over and over again.

This is where automated tests come in handy. Usually, test automation is used at the quality control stage. Testers deploy various frameworks to prepare the test environment, run tests, and verify the results without any unnecessary human involvement.

Implementing autotests has both advantages and disadvantages.

The main benefit of large-scale test automation is that it allows you to accomplish multiple tasks:

- Reduce the overall product delivery time – By reducing the time QA specialists spend on manual tests, autotesting helps you deliver your product to end users faster.

- Automate repetitive tasks – Each project has a number of routine day-to-day tests that take a lot of time when performed manually. Test automation helps to reduce the time spent on such activities.

- Reduce the risk of human error – Autotests allow you to reduce the risk of human errors when performing complex sequences of actions, like building and deploying scripts.

- Simplify regression testing – Often, changes and updates to the application under test require running a wide range of regression tests. Automating such tests speeds up the overall testing process and allows testers to pay more attention to unusual cases.

- Run tests that can’t be performed by human testers – Some tests require a level of calculation accuracy or involve logic so complex that a human tester can’t carry them out reliably.

On the other hand, autotests are also associated with a number of drawbacks:

- Instability – Autotests can produce false positives for various reasons: the failure of a particular component, problems with the environment, changes in the interface, flawed test logic, and so on.

- Continuous support – Autotests require continuous support. If the functionality of the application under test changes too often, refactoring the existing tests may take even longer than writing new ones from scratch.

- Inability to automate – Some tests, like usability and design tests, are just impossible to automate.

- Expertise requirements – Autotests can’t be implemented successfully without the involvement of highly qualified specialists. Unfortunately, this may incur additional costs for hiring such professionals or educating existing testers.

Still, test automation on large projects seems to be a rational decision. For our project in particular, the advantages surely outweigh the disadvantages. Let’s dig deep into the process of choosing, implementing, and supporting autotests for our project. But first, we need to take a closer look at the system we’re dealing with.

Brief overview of our project

Let’s start with a detailed description of the system to which we introduced our autotests. This knowledge will help you better understand the tasks we had and the challenges we faced.

Our goal was to build an enterprise-level monitoring solution that does three key things:

- Monitors client machines

- Detects malware

- Gathers data for further analysis

Monitoring is the main functionality of our client’s desktop application. It enables multiple types of monitoring operations for:

- Processes

- Network activities

- File activities

- Registry activities

- System logins

It can also search through the file system and registry and take periodic snapshots of the system state, including data about running processes, services, drivers, and open handles. Finally, it can acquire different objects: files, physical and logical drives, physical memory, and so on.

From the architectural point of view, this product is a multi-component client-server application.

Test tasks and requirements

When working on this enterprise-level monitoring application, we used a feature-branch development model, so we had a large number of active branches. Since the system consists of multiple components, preparing the environment, installing the build, and checking basic cases manually took a lot of time.

Our main tasks for running autotests were:

- Preparing the environment

- Automatically deploying the tested build

- Checking the basic test scenarios

After covering the basic scenarios, we planned to expand the sets of functional tests and build suites for regression testing. This way, we could save time on manual testing and avoid monotonous regression tasks.

Test requirements

We have a general set of best practices for implementing test automation in our projects. Based on these standards, we distinguished the following requirements for autotests in this project:

- A test must contain setup/teardown procedures for initializing preconditions and returning the environment to its initial state.

- Tests must successfully run in a dedicated, stable environment.

- Test data and any other hardcoded values should be moved to a separate file or configuration.

- Tests should run across platforms so we can run them in different environments without adjustments.

- Test results should be reflected in a general report on autotest performance.
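
The first and third requirements can be sketched in a few lines. The project's tests are written in C#, so the Python snippet below is only a language-neutral illustration, and the test case name, config keys, and values are all hypothetical. It shows setup and teardown procedures that create and remove the preconditions, with test data read from a separate file rather than hardcoded.

```python
import json
import os
import shutil
import tempfile
import unittest

# Hypothetical test data. In a real project it would sit in a versioned file
# next to the tests rather than in the test code itself.
SAMPLE_CONFIG = {"agent_host": "192.0.2.10", "timeout_seconds": 30}


def load_config(path):
    """Read test data from a JSON file so nothing is hardcoded in the tests."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


class AgentSettingsTest(unittest.TestCase):
    def setUp(self):
        # Preconditions: an isolated working directory with a config file.
        self.workdir = tempfile.mkdtemp()
        config_path = os.path.join(self.workdir, "config.json")
        with open(config_path, "w", encoding="utf-8") as handle:
            json.dump(SAMPLE_CONFIG, handle)
        self.config = load_config(config_path)

    def tearDown(self):
        # Return the environment to its initial state.
        shutil.rmtree(self.workdir)

    def test_timeout_comes_from_config(self):
        self.assertEqual(self.config["timeout_seconds"], 30)


def run_tests():
    """Run the test case and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(AgentSettingsTest)
    result = unittest.TestResult()
    suite.run(result)
    return result
```

Because teardown always removes what setup created, the same test can run repeatedly on one machine without leaving traces that affect later tests.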

Those were our basic requirements for automated tests. However, due to the specifics of the project, we also had to ensure that a number of special requirements were met:

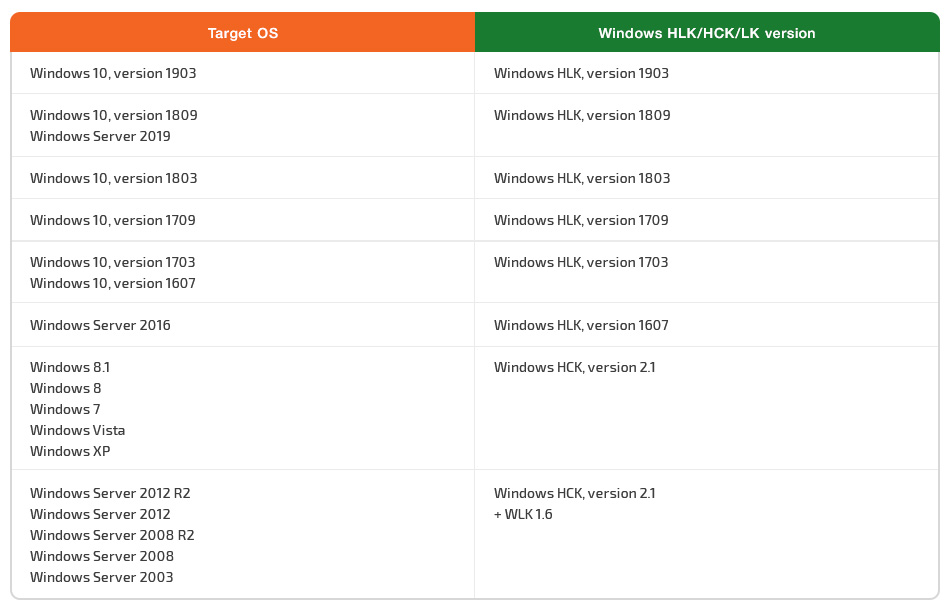

- Changeable operating systems – Our client’s application targets multiple operating systems across two large families: Windows and Linux. This is why it was important to ensure we could easily add a new operating system to the existing environment or quickly remove unsupported ones.

- New test suites built out of the general set of tests – QA specialists often need to collect specific sets of tests to ensure the proper functioning of the application. Therefore, a simple procedure was required for building new test suites out of the existing tests.

- Test results are easy to interpret – Due to the specifics of the project, we needed to ensure that any member of the testing team could easily run automated tests and unambiguously interpret their results.
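
One common way to meet the first two of these requirements is to tag every test and keep the list of target operating systems in one place. The sketch below is an assumption about how that could look, not a description of the project's actual framework. The test names, tags, and OS identifiers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseInfo:
    name: str
    tags: frozenset


# Hypothetical catalogue of automated tests and the tags assigned to them.
ALL_CASES = [
    CaseInfo("install_agent", frozenset({"acceptance", "deployment"})),
    CaseInfo("monitor_process_start", frozenset({"acceptance", "monitoring"})),
    CaseInfo("monitor_registry_write", frozenset({"regression", "monitoring"})),
    CaseInfo("acquire_memory_dump", frozenset({"regression", "acquisition"})),
]

# Adding or retiring an operating system is a one-line change here.
SUPPORTED_OS = ["windows-10", "ubuntu-22.04"]


def build_suite(cases, required_tags):
    """Return the names of the cases that carry every requested tag."""
    wanted = frozenset(required_tags)
    return [case.name for case in cases if wanted <= case.tags]


def plan_runs(suite, operating_systems):
    """Pair every test in the suite with every target operating system."""
    return [(os_name, test) for os_name in operating_systems for test in suite]
```

With this layout, `build_suite(ALL_CASES, {"monitoring"})` collects both monitoring tests, and `plan_runs` expands any suite across the current OS list without touching the tests themselves.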

With all these requirements in mind, we built a framework that was later used for automating tests in this project. In the next section, we describe the contents of our autotesting framework and provide a brief overview for each of its components.

Technologies that formed our framework

The framework we describe below was mostly built based on the tasks and requirements for autotests in this project. Aside from these requirements, we also considered the overall experience of our testing team and the scope of technologies used.

As a result, we ended up with the following framework:

Let’s look closer at each component of this framework.

Jenkins for continuous integration. At first, we implemented autotests with Bamboo by Atlassian. However, Bamboo offered rather limited functionality for our needs, so we switched to Jenkins. Jenkins is widely used both for automation in general and as an element of build systems in particular. Jenkins is the core of the autotesting process. It allows us to manage the environment, build tests, deploy tested builds to the environment, and generate test results. The main advantages of Jenkins are its configuration flexibility, its large base of extensions, and, of course, the fact that it’s free. We didn’t encounter any critical issues when implementing autotests with Jenkins.

Oracle VirtualBox Manager. Since we use VirtualBox as the main hypervisor, it was natural to choose a native tool for managing our virtual machines. Stability and simplicity are the main advantages of VirtualBox Manager.
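
As an illustration of what managing VMs from a script can look like, the sketch below builds the `VBoxManage` calls that return a machine to a saved snapshot and start it again. The article doesn't show the team's actual commands, so the VM and snapshot names are hypothetical. The Python wrapper is only there to keep the example self-contained.

```python
import subprocess


def snapshot_restore_commands(vm_name, snapshot_name):
    """Build the VBoxManage calls that return a VM to a clean snapshot."""
    return [
        # A running VM has to be powered off before a snapshot is restored.
        ["VBoxManage", "controlvm", vm_name, "poweroff"],
        ["VBoxManage", "snapshot", vm_name, "restore", snapshot_name],
        ["VBoxManage", "startvm", vm_name, "--type", "headless"],
    ]


def reset_vm(vm_name, snapshot_name, run=subprocess.run):
    """Power off, restore, and restart a VM; `run` is injectable for testing."""
    power_off, restore, start = snapshot_restore_commands(vm_name, snapshot_name)
    # A non-zero exit code is expected here if the VM is already powered off.
    run(power_off, check=False)
    run(restore, check=True)
    run(start, check=True)
```

Calling `reset_vm("win10-client", "clean")` on a host with VirtualBox installed would execute the three commands in order. Passing a fake `run` function makes it possible to check the command sequence without touching a real hypervisor.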

C# (.NET). We decided that all tests should be written in C# (.NET) because a significant part of the application’s GUI is also written in this language. Plus, our testing team has lots of experience working with C#, so we wouldn’t need to spend time learning a new programming language. We used the Visual Studio integrated development environment (IDE) for writing our code.

TestStack.White. Our client’s desktop application has been under development for a while and uses a number of specific controls, like DevExpress and TreeView. It was a bit difficult to find a library that could recognize such control types. After testing several options, we settled on the TestStack.White framework. The key advantages of this framework are its stable performance and its ability to identify and interact with all of the controls we needed.

Visual Studio Unit Testing Framework. This unit testing framework is integrated into Visual Studio, starting from Visual Studio 2005. It’s defined in Microsoft.VisualStudio.QualityTools.UnitTestFramework.dll. Since our team has specialists who have worked with this framework before, we decided to use it to save time at the initial stage of autotest implementation.

WMI and SSH for communication with remote clients. WMI and SSH are the standard means of communicating with remote Windows and Unix machines, respectively. They’re an obvious choice whenever tests need to work with client machines.

VSTest.Console.exe. Since we used Visual Studio as the IDE for writing the code, we also used its native command-line tool, VSTest.Console.exe, for running tests. It integrates easily with Visual Studio and, as a console utility, can be launched directly from a continuous integration job.
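
To show roughly what a CI job passes to the runner, here is a small sketch that assembles a `vstest.console.exe` command line. The `/TestCaseFilter`, `/Logger`, and `/ResultsDirectory` options are documented switches of the tool. The assembly name, category, and results folder are hypothetical, and the Python helper is just a convenient way to present the arguments.

```python
def vstest_command(test_assembly, category, results_dir):
    """Build an argument list that runs one test category and writes a TRX report."""
    return [
        "vstest.console.exe",
        test_assembly,
        # Run only tests marked with the given TestCategory attribute.
        f"/TestCaseFilter:TestCategory={category}",
        # Produce a .trx results file that CI plugins can parse.
        "/Logger:trx",
        f"/ResultsDirectory:{results_dir}",
    ]


if __name__ == "__main__":
    print(" ".join(vstest_command("AutoTests.dll", "Acceptance", "TestResults")))
```

Filtering by category at launch time is one way to let the same compiled test library serve several suites.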

You can use the description of our framework as a guide for composing your own autotesting framework. Look for tools that best fit the requirements of your project.

Now that we’ve described all the tools and solutions for test automation, it’s time to move to the basics of the testing process.

Starting the testing process

Before running any tests, we’ll need to define three key parameters:

- The build we’ll run our tests on

- The configuration in which we’ll run the tests (We need to support both Windows and Linux, so we’ll use different databases and support two operation modes.)

- The test suite that will be executed (We need to choose the level of testing detail and the features we want to check.)

Once these parameters are defined, the whole testing process can be accomplished in the six steps listed below.

- Prepare a test library. At this step, we use Jenkins and Visual Studio to compile the code of the tests that will be executed.

- Copy installers of the tested components to the environment. Since we’re automating the testing of a desktop application, we need to install it before running any tests.

- Prepare target virtual machines (VMs). VMs that will be used for testing purposes are rolled back to clean snapshots. Then a new system login is created.

- Deploy main components and agents. We install and configure the main kernel of the system and install agents locally on each machine.

- Test selected features according to our test suite. Using autotests, we interact with the system and validate the system responses to our actions. This is the most important part of the whole testing process.

- Form a report with test results. Once all the tests are finished, a report with test results is generated. These results can then be used for analysis and to improve the application’s performance and functionality.
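
The six steps above form a strictly ordered pipeline: each step depends on the previous one, and a report is produced at the end. A minimal sketch of such a runner follows. It assumes that a failed step stops the run and that the remaining steps are marked as skipped. The article doesn't state this policy, so treat it as one reasonable choice rather than the project's actual behavior.

```python
def run_pipeline(steps, report):
    """Run (name, action) steps in order and stop at the first failure.

    Steps that were never reached are marked as skipped, and the report
    callback always receives the full list of outcomes.
    """
    outcomes = []
    failed = False
    for name, action in steps:
        if failed:
            outcomes.append((name, "skipped"))
            continue
        try:
            action()
            outcomes.append((name, "passed"))
        except Exception as error:
            outcomes.append((name, f"failed: {error}"))
            failed = True
    # The sixth step, forming the report, runs regardless of earlier failures.
    report(outcomes)
    return outcomes


if __name__ == "__main__":
    demo_steps = [
        ("prepare test library", lambda: None),
        ("copy installers", lambda: None),
        ("prepare VMs", lambda: None),
        ("deploy components and agents", lambda: None),
        ("run selected tests", lambda: None),
    ]
    run_pipeline(demo_steps, print)
```

Reporting even after a failure means a broken environment shows up as an explicit "failed" step instead of a missing report.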

When it comes to test automation, the biggest question is which tests to automate first. Let’s look closer at the process of selecting tests for automation.

Selecting tests for automation

With our project, the application under test has been under development for a long time and has extensive functionality. At the time of writing, the number of tests in TestRail is already around 5,500. This is why selecting tests for automation is a pressing issue.

Our acceptance test suite includes 300 tests. Our first task was to prioritize all of them.

There are many test prioritization approaches. These are the four most common:

- Based on the customer’s priorities

- Based on implementation complexity

- Based on the need for manual testing

- Based on test complexity

With the first approach, we start automating tests for features and functionalities that the customer considers the most important. Usually, these are the features that will cause the most damage if they have unfixed bugs in them.

The complexity of test implementation is also a great marker for selecting tests to automate first. Begin with the tests that are easiest to automate so you can start benefiting from test automation while putting minimal effort into it.

If your project requires extensive use of manual tests, you can start by automating the ones that you usually don’t perform during manual testing. This way, you can make sure that all test cases are covered.

Finally, consider starting your test automation with tests that are challenging to run manually. For instance, it’s surely difficult to manually test APIs, complex calculations, and comparison of large quantities of data. These tests are better left for machines.

For our monitoring application, we combined the first two approaches. First, we sorted all tests according to two parameters: case priority and automation complexity.

Tests with a high priority and low automation complexity were automated first. This way, in a short period of time, we managed to create a useful test suite for displaying the actual system state. We received the first benefits of test automation in practically no time and with minimal effort.
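
This two-parameter sorting is easy to express in code. The sketch below assumes both parameters are scored from 1 to 3 and that priority takes precedence over complexity when the two conflict. The scale, the tie-breaking rule, and the test names are illustrative assumptions, not details from the project.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    priority: int    # 1 = highest case priority
    complexity: int  # 1 = easiest to automate


def automation_order(candidates):
    """Order tests so high-priority, low-complexity ones are automated first."""
    return sorted(candidates, key=lambda c: (c.priority, c.complexity))


# Hypothetical backlog of manual tests waiting for automation.
BACKLOG = [
    Candidate("export report layout", priority=3, complexity=2),
    Candidate("acquire physical memory", priority=1, complexity=3),
    Candidate("registry search", priority=2, complexity=1),
    Candidate("install agent", priority=1, complexity=1),
]

if __name__ == "__main__":
    for candidate in automation_order(BACKLOG):
        print(candidate.priority, candidate.complexity, candidate.name)
```

A team that values quick wins more than coverage of critical features could swap the two elements of the sort key.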

Then, we moved to tests that were more difficult to automate. At this point, we had already saved some time thanks to the previously automated tests, so we had enough resources to create and debug complex tests.

In the end, we created an acceptance test suite that we could run to get accurate information about the current state of the build.

The next stage was forming a test suite for running regression tests of various application features. Our prioritization approach was the same: first we automated the tests that had high priority and low automation complexity, then those that were less critical or more complex.

In the next section, we finally move to the process of implementing autotests and talk about the main stages of autotesting.

Implementing autotests

Implementing automated tests is a complex process. In our case, it consisted of the following ten steps:

- Setting autotest goals

- Defining requirements for autotests

- Defining framework components

- Selecting and prioritizing tests

- Preparing the test environment

- Configuring the CI component

- Auto-deployment of the tested build to the environment

- Implementing tests

- Documenting tests

- Configuring reports

Once we had determined our requirements for the tests, framework components, and test suites, we were ready to start actually implementing the tests.

Since a proper environment is the key to getting the most out of autotests, it’s important to pay special attention to this part. For instance, in our project, we dedicated several powerful computers for running autotests so that we could launch up to ten virtual machines simultaneously.

Once all the resources needed for implementing autotests were ready, we could move to configuring the Jenkins CI system. We performed the initial configuration and installed Jenkins slave agents on all client machines involved in the testing.

The next step was the deployment of the tested build. Since the main development is carried out in branches, we couldn’t implement full-fledged CI. This is why we deployed the tested build during test execution and not right after it was assembled by the build system.

After we finished the deployment process, we could start writing tests. Just like with standard development, we ran implementation, testing, and bug fixing cycles iteratively until we achieved stable tests.

Also similar to the standard development process, once we finished writing the test scope, we moved to the documentation stage. At this point, we needed to describe the tested environment, architecture, number of checks, and specifics of autotest implementation. It’s noteworthy that we had two types of documentation:

- Technical documentation for automation specialists

- General documentation for other members of the testing team

The first set of documentation contains more technical details on test implementation, while the second describes the same tests from the product point of view.

The final stage is configuring reports: we determined what report formats to use, who would receive different types of reports, and so on.
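
As a rough idea of what a report aimed at the whole testing team might contain, the sketch below condenses raw results into a verdict, a set of counters, and a list of failed tests. The status names and output layout are assumptions made for illustration. The article doesn't specify the report formats that were chosen.

```python
from collections import Counter


def summarize(results):
    """Turn a {test name: status} mapping into a short plain-text report."""
    counts = Counter(results.values())
    # A run passes only if something ran and nothing failed.
    passed = counts["failed"] == 0 and counts["passed"] > 0
    lines = [
        f"Build verdict: {'PASSED' if passed else 'FAILED'}",
        f"passed={counts['passed']} failed={counts['failed']} "
        f"skipped={counts['skipped']}",
    ]
    lines += [
        f"  FAILED: {name}"
        for name, status in sorted(results.items())
        if status == "failed"
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(summarize({"install_agent": "passed", "registry_search": "failed"}))
```

Putting the verdict on the first line lets a reader who doesn't know the test internals see the state of the build at a glance.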

Supporting autotests

Once autotests are implemented, the next task is to ensure their continuous support. Although our tests are based on a GUI, they’re quite stable, mostly because the interface of the application under test doesn’t change that often.

Still, there are a number of things we need to do to support autotests and the environment they’re running in:

- Keep existing virtual machines up to date

- Add new versions of operating systems and exclude old ones from the testing environment

- Fix tests that stop working due to changes in the GUI

- Refactor tests to improve performance and increase stability

It’s also noteworthy that our case is rather an exception. Most GUI tests are unstable due to the rapidly changing interface as well as issues with the recognition of controls and GUI elements and interactions with them.

In general, if you want to implement stable autotests, here are three recommendations:

- Make sure that most of your tests are API tests

- Try avoiding GUI tests if possible

- For interacting with the GUI, choose the library that best fits your product’s interface

By following these recommendations, you can significantly improve the stability of your autotests.

Conclusion

Autotesting is a great way to improve overall product testing, get more accurate test results, and save time that you would have otherwise spent on manual tests. In particular, test automation for enterprises can save a lot of time (and thus money) by automating regression tests.

In this article, we took a detailed look at the process of selecting, implementing, and supporting automated tests using the example of one of our current projects. When we implemented autotests in our client’s enterprise desktop application, we saw a number of significant benefits:

- With the help of autotests, we could get up-to-date information about the state of the tested build before it even made it to manual testers.

- We found (and were able to fix) a few dozen bugs before the build was tested by manual testers, saving a lot of time.

- We excluded the human factor from regular tests like checking file signatures after the build process.

In the end, autotests became an essential part of the testing process in this project, and we continue to benefit from their implementation. In the future, we plan to expand the scope of autotests for current features and continue automating tests as new functionalities are introduced to our client’s application.

At Apriorit, we have a team of skilled QA specialists who will gladly help you make your product even better and get the most out of test automation. Get in touch with us to start discussing your project.