When it comes to artificial intelligence (AI) projects, quality assurance is essential. However, there’s a difference between testing in AI projects and in other software projects.

Unlike traditional software, AI systems are continuously learning and evolving. Therefore, they have to continually be managed and tested. To ensure proper performance, your testing strategy may differ from the one you usually apply to non-AI systems.

In this article, we reveal some key points of testing AI and machine learning (ML) systems. You should keep these points in mind when planning testing activities and test cases. This information will be useful for QA engineers who want to effectively test AI solutions from the very beginning of development.

Artificial Intelligence: definition, related terms, and types

Testing AI systems requires QA engineers to understand what artificial intelligence is and how it works. Let’s start by defining some basic terms.

Artificial intelligence, or AI, enables machines to perform functions associated with the human mind, such as:

- Learning

- Planning

- Image/audio/video recognition

- Problem solving

AI-driven systems include software, applications, tools, and other computer programs.

AI systems learn and gain experience with the help of machine learning, or ML — a branch of AI that’s concentrated on data analysis. The results of this analysis are used to automate the development of analytical models. In other words, ML allows machines to learn.

Another essential AI-related term is deep learning (DL), a subfield of ML concerned with processing data and identifying patterns for use in decision-making. Deep learning is based on artificial neural networks — sets of algorithms designed to recognize patterns and learn to perform tasks based on examples, without being programmed with task-specific rules.

Looking to implement AI in your project?

Leverage Apriorit’s deep expertise in AI and machine learning to develop smart, scalable solutions tailored to your business needs.

AI implementation types

For testing AI solutions, it’s essential to understand the way AI works and to know the basic classification of AI systems.

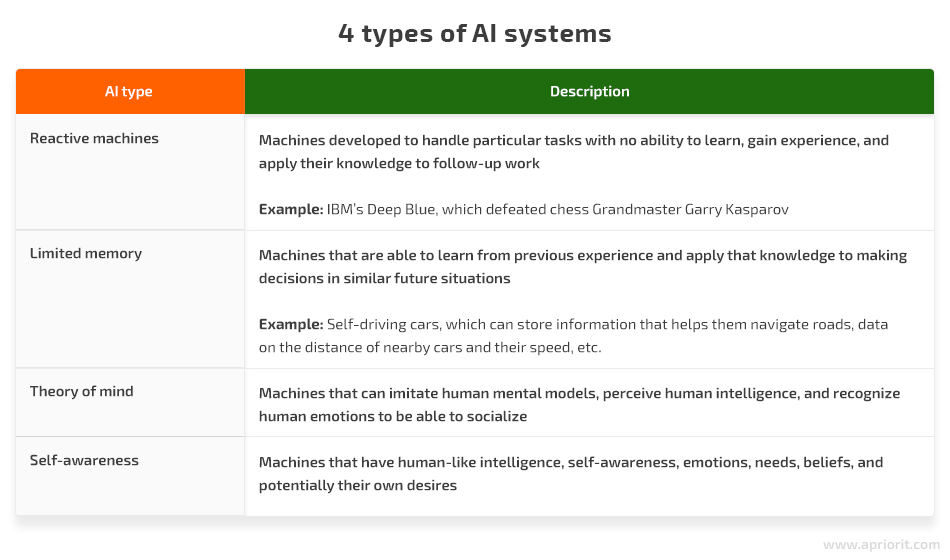

While there are many ways to classify AI systems, we can determine four categories depending on how advanced they are. There are two existing types of AI systems: reactive machines, such as those applied in IBM’s Deep Blue, and limited memory machines, such as those used in self-driving cars.

Two more approaches expected to be developed in the future are the theory of mind and self-awareness, though they’re still considered science fiction by skeptics. Moreover, the issue of artificial consciousness belongs to the fields of both philosophy and technology.

Now that we’ve defined the term AI and discussed its types, let’s move further and learn what we should pay attention to before testing an AI system.

What should you know before testing an AI system?

Despite various myths that AI can be 100% objective and work like the human brain, AI systems still can go wrong. Therefore, thorough testing is required.

AI systems have to be tested at each stage of development. All the usual approaches that QA engineers take to testing at different development phases are applied here. For instance, you need to analyze requirements to check for inaccuracies, achievability, and executability. Also, you have to develop a testing strategy according to the system’s architecture.

The first thing to do is define whether you’re testing an application that consumes AI-based outputs or is an actual machine learning system.

Applications that consume AI-based outputs usually don’t require a special approach to testing. You can apply black-box testing techniques, just like when testing regular deterministic systems. Focus on checking whether the application behaves correctly when presented with an output from the AI.

However, If you’re faced with machine learning systems, your testing strategy will be more complicated. While standard testing principles are applicable to AI testing, you can also use white-box testing techniques. Additionally, a QA specialist should have:

- experience working with AI algorithms

- knowledge of programming languages

- the ability to correctly pick data for testing

Make sure to get all information from developers about datasets used to train the network. Usually, this data is divided into three categories of datasets:

- Training dataset — data used to train the AI model

- Development dataset — also called a validation dataset, this is used by developers to check the system’s performance once it learns from the training dataset

- Testing dataset — used to evaluate the system’s performance

Knowing how these datasets play together to train a neural network will help you understand how to test an AI application.

Read also

Developing an AI-based Learning Management System: Benefits, Limitations, and Best Practices to Follow

Explore how AI enhances learning management systems. Our experts share the key benefits of AI-driven LMS solutions and tips to improve students’ experience and boost engagement.

Key challenges in testing AI systems

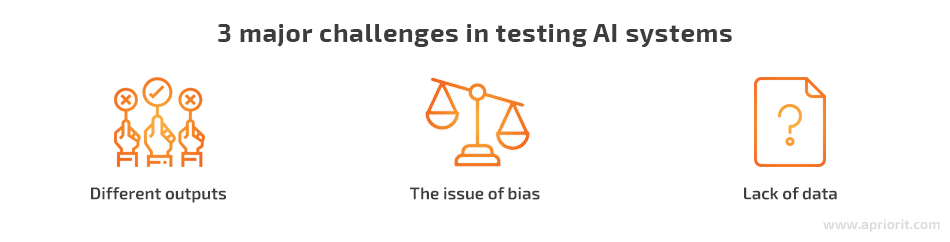

AI systems may be used for various purposes, have different architectures, and offer unique challenges to QA engineers. However, when testing any AI system, you’ll meet three major challenges.

1. Different outputs

The fact that a learning system will change its behavior over time brings some challenges.

For instance, implementing a test oracle in AI algorithms is impossible without human intervention. An oracle refers to a mechanism or another program used to determine whether a system is working correctly. However, if you take image recognition, for example, you need humans to check whether images are labeled correctly.

Testers have to check certain boundaries within which the output must fall. Predicting the output of AI systems may be an issue because they’re constantly learning, training, analyzing mistakes, and adjusting themselves to new information.

Thus, today’s output may be different from tomorrow’s. To ensure that a system stays within defined boundaries, it’s essential to look at the input: by limiting the input, we can influence the output.

2. The issue of bias

Some myths claim that AI is free of bias, which unfortunately isn’t true.

Training datasets are provided by humans. Therefore, there’s always a risk that AI solutions will be biased. There are several ways to deal with the problem of bias in AI. For instance, you can deploy ML algorithm evaluation tools to check a system for possible unfairness.

Also, both developers and QA engineers have to think about using diversified datasets when training and testing an AI system. However, a lack of data poses its own challenges.

3. Lack of data

AI systems may be used to handle different tasks: analyzing users’ preferences to offer them similar products, processing images, videos, or voice records, etc. Data required for learning may be very specific and complicated to get.

Also, the performance of some AI systems can worsen as the accuracy of the output declines with time. This situation can be caused by changeable user behavior or dynamically changing data. One way to solve this issue is to regularly retrain the network with a new dataset, which can be costly or even impossible because of a lack of data.

If you work with images, you may use data augmentation techniques to get more training data. For example, you can use ImageDataGenerator. Sometimes, data can be gathered by the testing team from internet sources. However, this approach requires much time.

Read also

Challenges in AI Adoption and Key Strategies for Managing Risks

Learn how to prepare your project\company for AI adoption. Explore practical solutions to common AI implementation challenges and accelerate your organization’s AI journey with expert insights from Apriorit.

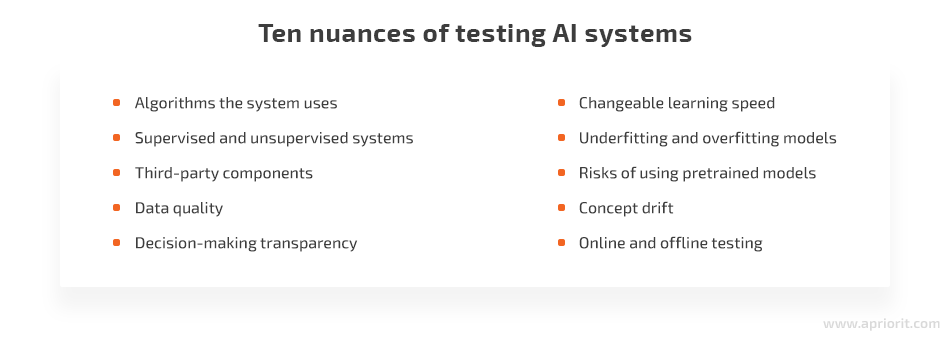

10 nuances of testing AI systems

Similar to any other type of testing, there’s no single correct approach that can be applied to test every AI system. When it comes to AI, attention should be paid to the type of system and the parameters it uses to make decisions.

First of all, you should start with considering the AI system’s characteristics and answering the following questions:

1. Which algorithms does the system use?

Before starting the testing process, it’s essential to know the type of AI algorithms used in a particular application and to pay attention to the general form of the system and the number of parameters used for decision-making.

For instance, in the case of a linear system, the number of parameters can be limited. However, if a vector-type system has too few parameters, it can have high entropy indicators and make a wide range of incorrect decisions.

Decision tree systems are classic binary trees that have yes or no decisions at each split until the model reaches the result node. Decision tree algorithms are used, for example, by the Sophia robot to chat with humans.

2. Is the system supervised?

When a supervised system has specific predefined parameters, there should be a check for algorithm errors (when developing a custom tool, not importing standard functions).

Since an unsupervised system searches for hidden connections on its own, an error can occur when preparing the training data. Bad data in, bad data out: if the AI system receives inaccurate, incorrect, duplicate, or incomplete data for learning, it won’t provide correct results when performing tasks.

3. What third-party components does the system use?

The libraries and modules your AI system uses are usually developed by other teams, which leads to a risk that they’ll have their own bugs. Therefore, you should monitor bug tracking software when working with third-party tools. Also, it can be dangerous to implement a module into a system without validating the input data from third-party components.

Third-party components may also cause security issues. However, there’s no way to eliminate this problem completely. The only way to minimize the risk is to use only trusted third-party resources.

4. What data does the system use?

Another issue is that the data for learning can contain information of poor quality — for example, damaged images.

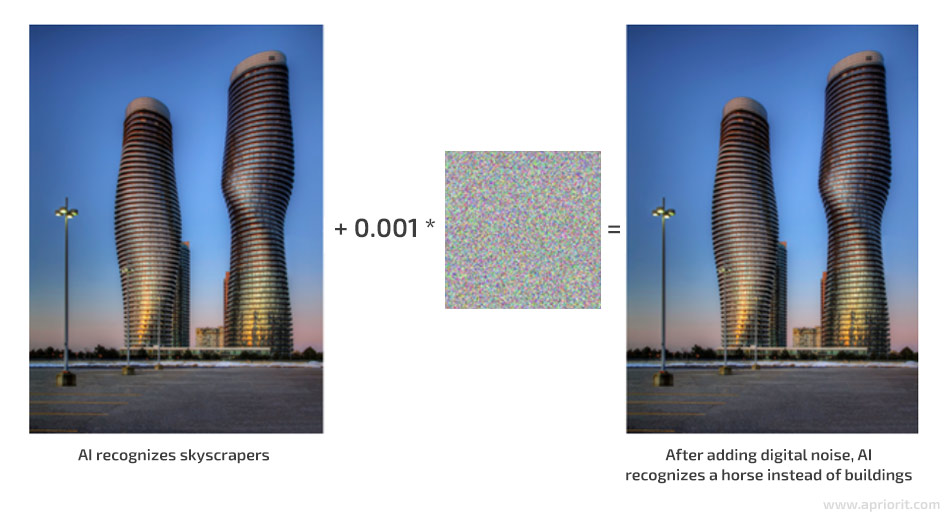

If you provide an image recognition system with a skyscraper picture first and later give it an image of digital noise, the system most likely won’t recognize the skyscraper again because of the influence of the digital noise image.

5. Is the decision-making process transparent?

To perform comprehensive testing, it’s essential for an AI system to provide clear reasons for each decision it makes. This will help with diagnosing any issues that may occur and allow for root cause analysis, which is one of the major techniques for locating bugs.

The issue of decision-making transparency has to be discussed with the team before development starts so that both developers and QA engineers can understand this problem and ask questions to prevent misunderstandings.

Read also

Techniques for Estimating the Time Required for Software Testing

Struggling with allocating enough time for QA? Explore proven techniques for efficient estimation of testing activities in software projects to plan your project realistically and stay within budget.

6. Have you thought about changeable learning speed?

When training an AI, issues may occur for an unknown reason. For instance, the input data most likely will be processed with non-linear speed because the AI learning process itself is non-linear. This speed can periodically change, affecting the estimated learning time.

7. Is the ML model underfitting or overfitting?

Overfitting refers to when a system handles the training data too well. This happens when an ML model becomes too attuned to the data used for its training and loses its applicability to any other dataset. For example, overfitted systems learn details and digital noise in the training data to the extent that it harms the performance of the model on new data.

Underfitting refers to when a system fails to sufficiently capture the underlying structure of the data because it misses some key parameters. For instance, underfitting can occur when providing a linear model with non-linear data. Such models will show poor performance on a training dataset.

8. Does your team use a pretrained model?

Many AI developers use pretrained ML models for their new projects. A pretrained model was trained on an extensive dataset or even on several datasets to solve a problem similar to the one you’re trying to solve.

This solution obviously saves lots of time and effort. However, it has some downsides.

If you use a pretrained model, there’s always a risk of some misconfigurations or bugs that can be found later. And if you give third parties your own pretrained model and allow them to run it locally, it may lead to security issues for your end application. For instance, attackers can inspect its underlying code and properties to break your system and get access to personal data.

9. Are you aware of the challenge of concept drift?

One of the reasons why your AI system’s performance can degrade is that data changes over time. What’s true today can be wrong in a year. Therefore, it’s essential to track how old the data is that’s used for the system’s training and to use updated datasets to prevent concept drift.

Concept drift in AI and ML refers to a change in the relationships between input and output data in the underlying context over time. Predictions become less accurate as time passes.

10. Do you consider online and offline testing?

It’s essential to test AI systems in production to see how they handle new data, even if offline testing has shown good results.

A study by researchers from the University of Luxembourg and Ottawa University investigated combining online and offline methods for testing autonomous driving systems. Offline testing of AI systems is less expensive and faster than online testing. However, it’s not as effective at finding significant errors. While the results are similar, offline testing tends to be more optimistic. The reason is that online testing predicts more safety violations that aren’t suggested by offline testing.

Related project

Leveraging NLP in Medicine with a Custom Model for Analyzing Scientific Reports

Explore how NLP transformed medical data analysis and helped the client’s system reach over 92.8% accuracy. Find out how our team created an NLP solution that improved data processing for our client from the healthcare industry.

Conclusion

We hope this article has helped you grasp how to test AI applications. Hopefully, our tips will help you form a mind map that you can use to cover the most critical parts of your system with tests. Thus, you can reduce the number of errors in your AI system and minimize the amount of time and resources spent fixing them.

If you’re considering creating an AI solution, our experienced development team will be happy to help you expand the boundaries of your project with AI and ML technologies.

Need advanced AI solutions?

Unlock the potential of artificial intelligence with Apriorit’s development skills designed to optimize performance and drive growth.