Testing your software’s performance can be time-consuming. Constantly checking and fixing failed tests or bugs takes a lot of time and requires a lot of effort on the part of quality assurance (QA) teams, especially when they do it manually.

To save time and still deliver quality results, many projects aim to automate their testing efforts. At Apriorit, we are careful in developing automated testing strategies and use TestRail for managing automation test results.

This article will help you understand how to improve the efficiency of your QA processes with the help of TestRail by sharing our strategies when using this tool.

Why should you manage automation testing results?

To ensure effective automation testing, you need to devise a test management strategy and choose appropriate technologies and test coverage for your project. This will provide you with transparency into autotest results, efficient analysis and management of those results, and a strategy for dealing with fragile tests, which are tests that work most of the time but occasionally fail for no apparent reason.

One of the most common problems when implementing test automation is a lack of attention to the management of autotest results. Managing the results of automation tests is an integral part of building an efficient testing strategy. It can help you significantly shorten the repetitive manual testing process and optimize your QA expenses in the long run.

The worst-case scenario is when you run tests locally in the automated quality assurance (AQA) environment and they are not integrated into the continuous integration and continuous deployment (CI/CD) pipeline. In this case, you have no control over either the regular running of autotests or their results. As time passes, or after changing your AQA environment, autotests may never be run again and their previous results may get lost. Consequently, the money and other resources dedicated to writing and running these tests may prove to have been spent in vain.

In more mature processes, QA engineers and developers integrate autotests into the CI/CD pipeline. QA specialists regularly run tests in test environments, and results are compiled in one report. Usually, the automation testing team works independently from the manual testing team. Therefore, manual tests and their results are monitored separately from autotests. There are some negative consequences to this:

- You need to put effort into managing test results in more than one place

- There’s a risk of not responding to failed autotests in time

Sometimes, manual and automated QA specialists have blurred responsibilities for monitoring the results of autotests, which might lead to test results not being processed or even being lost. Also, the unclear responsibilities of manual and automated QA specialists complicate the process of calculating autotest coverage, which is one of the most important metrics for managing test automation.

Now, let’s take a look at different situations when you can integrate test automation tools into your testing process.

What can you achieve with test automation tools?

There are many things you can oversee by integrating autotest management tools into your QA department’s work.

Automation test scenarios. Autotest management tools provide you with a convenient way of storing autotest scenarios for future use. For example, if you’re using behavior-driven development or a keyword-based test automation approach, you can retrieve automation test scenarios from storage for every test run.

Test execution process. During the execution of test cases, autotest management tools provide many options for combining tests: sequential execution, prioritization, parallel execution, and others. Based on the requirements, resources, and objectives of the testing project, you can select the best option and integrate it into your autotest management tool.

Test execution results. Many test management tools allow you to generate reports after a test run. These reports have a lot of visual components, including execution outcomes, failed tests and reasons for their failure, and total execution time.

Test execution history. All of your test run results are stored and can be retrieved at any moment. You can also generate statistics on all executions with different parameters depending on the functionality of the selected tool.

To optimize the monitoring and management of autotest results, the Apriorit team uses an integrated approach that combines manual and automated testing activities. As part of this approach, we:

- ensure that QA specialists responsible for manual and automated testing work in constant contact as part of a single team

- use a single tool for managing both manual tests and autotests

There are several popular tools for autotest result management, including TestRail, Zephyr, QMetry, and Testmo. Based on our experience, we prefer using TestRail, as it provides the following benefits:

- Jira testing integration. We use Jira as our main progress-tracking software, so using TestRail for test automation together with Jira is very convenient for our team.

- Ability to manage both manual and automated tests. This improves the productivity of our QA team, allowing them to respond to failed tests in a timely manner.

- Test cases unification. This allows us to use test cases in one single format that suits us and automatically export test cases from TestRail in the same format. After we import existing test cases or create new ones, TestRail will apply the selected template to all available test cases.

- User-friendly UI. TestRail is not only visually appealing but also intuitive to use.

Now let’s take a look at our strategy for managing automation testing results in TestRail.

How to process automation testing results in TestRail

At Apriorit, we have developed our own effective QA strategy based on the shift-left approach.

Benefits of this strategy include:

- time and cost savings on QA activities

- faster time to market

- improved user experience

- satisfactory product performance

As part of our effective automation strategy, we build autotest coverage at the early test stages before strategy implementation. After finishing the test design stage, we analyze which of the designed test cases should be covered with autotests. The chosen tests are marked in TestRail as Automated in the Type field.

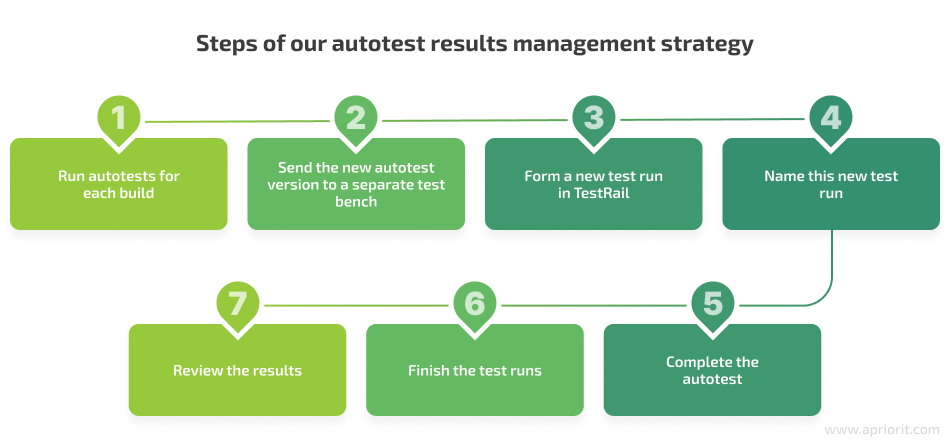

Specifying test case types allows us to have a corresponding test case for all autotests during TestRail automated testing. With this approach, the process of running autotests and monitoring their results becomes controllable and transparent. Since we have a corresponding test case for each autotest and we know how to create test runs automatically in TestRail, we can finalize our autotest results management strategy as follows:

- First, we run autotests as part of the CI/CD pipeline for each build.

- After a successful build, we send the new autotest version to a separate test bench for running autotests.

- With each run, we form a new test run in TestRail, adding all tests of the Automated type.

- After forming a new test run, we name it according to a template so we can easily understand the version and time of the test run. For example, we use the following template: Acceptance_Automation_Run_<build version number>_<timestamp>. The autotests then run to completion.

- The status of each test is automatically entered in a test run after completing the autotest – Passed, Failed, or Untested.

- We finish all test runs. After that, we get a notification with a link to a new test run.

- Finally, we review the results of passed tests and analyze tests with a Failed or Untested status.
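The naming step in the list above can be sketched in a few lines of Python. This is only an illustration: the function name build_run_name and the timestamp layout (YYYYMMDD-HHMMSS) are assumptions, since the template itself only says <timestamp>.

```python
from datetime import datetime, timezone

# Template from the article; the placeholders are filled in below.
RUN_NAME_TEMPLATE = "Acceptance_Automation_Run_{build}_{timestamp}"


def build_run_name(build_version: str, started_at: datetime) -> str:
    """Fill the naming template with a build version and a sortable timestamp."""
    # Assumed layout: YYYYMMDD-HHMMSS, which sorts chronologically as plain text.
    timestamp = started_at.strftime("%Y%m%d-%H%M%S")
    return RUN_NAME_TEMPLATE.format(build=build_version, timestamp=timestamp)


if __name__ == "__main__":
    print(build_run_name("1.4.2", datetime.now(timezone.utc)))
```

A fixed-width timestamp keeps runs of the same build in chronological order when they are sorted by name in the test run list.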

When working with tests that have a Failed or Untested status, we also use a defined workflow:

- We create bug tickets for all failed tests in the bug tracking system. Our team always rechecks failed tests manually and includes the result of the manual check in the bug report.

- We always put a high priority on fixing a bug or a failed test, even if the failure cannot be reproduced manually. We do this because an incorrectly working or broken test has the same priority for us as a bug in the finished product.

- We also create bug tickets for tests with the Untested status. Once we've included tests with the Automated type in a test run, they must be covered by autotests and executed regularly. If a test exists but our team hasn't executed it yet, this is also a problem with the same priority as a bug in the product.
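As a rough sketch of this workflow, the snippet below turns the results of one test run into ticket drafts. The CaseResult class, the plan_tickets function, and the ticket fields are all hypothetical names made up for illustration; a real implementation would send these drafts to your bug tracker.

```python
from dataclasses import dataclass


@dataclass
class CaseResult:
    case_id: int
    title: str
    status: str  # "Passed", "Failed", or "Untested"


def plan_tickets(results):
    """Return one high-priority ticket draft per Failed or Untested result."""
    tickets = []
    for result in results:
        if result.status == "Failed":
            tickets.append({
                "case_id": result.case_id,
                "summary": f"Autotest failed: {result.title}",
                "priority": "High",
                # Failed tests are rechecked manually before the report is filed.
                "needs_manual_recheck": True,
            })
        elif result.status == "Untested":
            tickets.append({
                "case_id": result.case_id,
                "summary": f"Autotest was not executed: {result.title}",
                "priority": "High",
                "needs_manual_recheck": False,
            })
    return tickets


if __name__ == "__main__":
    run = [
        CaseResult(101, "Login works", "Passed"),
        CaseResult(102, "Report export", "Failed"),
        CaseResult(103, "Password reset", "Untested"),
    ]
    for ticket in plan_tickets(run):
        print(ticket["summary"])
```

Both branches use the same priority on purpose, mirroring the rule that a broken or unexecuted test is treated like a product bug.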

As a result, we have created a transparent system for monitoring and controlling the regularity of autotest launches and the results of autotests. The whole team, from AQA and manual QA specialists all the way to developers and management, can receive notifications about test launches and easily see the results of a test run.

Both AQA and manual QA specialists can review test results and bugs registered for failed tests. Everything is completely transparent and accessible to all participants in the test process. Now that we have explained our strategy for processing automation testing results in TestRail, let's look at how to integrate TestRail automation into your QA process.

How to integrate autotest results with TestRail (practical example)

If you want to integrate autotest results with TestRail, you can use ready-made plugins that correspond to the technologies you used when writing autotests. The approaches we show in the examples below are for Python tests and Cypress tests.

We recommend creating a new test run with each run of autotests because it's more convenient than reusing a single test run. This way, you won't lose important information such as test execution dates and failed test statistics. To create a new test run with each run of autotests, we implement a wrapper over the TestRail API.
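A minimal sketch of such a wrapper is shown below. It is not our production code: the class name TestRailRunCreator and its methods are hypothetical, and the example host is a placeholder. The sketch relies only on the add_run endpoint of the TestRail API (v2) and HTTP Basic authentication with an email and API key.

```python
import base64
import json
import urllib.request


class TestRailRunCreator:
    """Hypothetical thin wrapper that creates one TestRail run per autotest launch."""

    def __init__(self, base_url: str, email: str, api_key: str):
        self.base_url = base_url.rstrip("/")
        token = base64.b64encode(f"{email}:{api_key}".encode()).decode()
        self.headers = {
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        }

    def build_add_run_request(self, project_id, suite_id, name, case_ids):
        """Prepare the POST request for add_run without sending it."""
        url = f"{self.base_url}/index.php?/api/v2/add_run/{project_id}"
        payload = {
            "suite_id": suite_id,
            "name": name,
            # Include only the listed cases (for example, those of the Automated type).
            "include_all": False,
            "case_ids": list(case_ids),
        }
        return urllib.request.Request(
            url,
            data=json.dumps(payload).encode("utf-8"),
            headers=self.headers,
            method="POST",
        )

    def add_run(self, project_id, suite_id, name, case_ids) -> int:
        """Send the request and return the ID of the newly created run."""
        request = self.build_add_run_request(project_id, suite_id, name, case_ids)
        with urllib.request.urlopen(request) as response:
            return json.load(response)["id"]
```

The returned run ID can then be passed to the reporter plugin for that launch. Separating request building from sending keeps the wrapper easy to check without a live TestRail instance.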

Let’s take a closer look at each of the used plugins.

Integrating Python tests

You can use the pytest-testrail plugin to integrate TestRail and automated tests into your QA process.

When you run Python test automation, you need to specify the reporter and the test run ID.

For example:

python -m pytest --testrail --tr-run-id=111

Autotests are marked with the @pytestrail.case(<testrail_case_id>) decorator. After a test is performed, the corresponding test status is added to the test run.

During test run execution, we use data from the testrail.cfg file, which contains the following parameters: URL, credentials, project_id, suite_id, etc.

Here is what a configuration file may look like for a Python test:

    [API]
    url = https://yoururl.testrail.net/
    email = user@email.com
    password = <api_key>

    [TESTRUN]
    assignedto_id = 1
    project_id = 2
    suite_id = 3
    plan_id = 4
    description = 'This is an example description'

    [TESTCASE]
    custom_comment = 'This is a custom comment'
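If your own tooling also needs these values (for example, a wrapper that creates test runs), the file can be read with Python's standard configparser module. The sketch below parses the sample configuration above from a string; the function name load_testrun_settings is an assumption, and how the plugin itself reads the file is not shown here.

```python
import configparser

SAMPLE_CFG = """
[API]
url = https://yoururl.testrail.net/
email = user@email.com
password = <api_key>

[TESTRUN]
assignedto_id = 1
project_id = 2
suite_id = 3
plan_id = 4
description = 'This is an example description'

[TESTCASE]
custom_comment = 'This is a custom comment'
"""


def load_testrun_settings(cfg_text: str) -> dict:
    """Extract the values needed to address a project and suite in TestRail."""
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    testrun = parser["TESTRUN"]
    return {
        "url": parser["API"]["url"],
        "project_id": testrun.getint("project_id"),
        "suite_id": testrun.getint("suite_id"),
        # configparser returns the value verbatim, including the quotes.
        "description": testrun["description"],
    }


if __name__ == "__main__":
    print(load_testrun_settings(SAMPLE_CFG))
```

Note that configparser does not strip the single quotes around the description, so remove them yourself if you reuse the value elsewhere.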

Now let’s take a look at integrating Cypress tests into TestRail.

Integrating Cypress tests

You can use TestRail Reporter for Cypress to integrate Cypress tests into TestRail.

You need to include the ID of the corresponding TestRail test case in each Cypress test. When executing Cypress tests, the reporter reads its settings from the cypress.json file automatically. These settings include the following parameters: reporter, TestRail URL, credentials, project_id, suite_id, run_id, etc.

For example:

    ...
    "reporter": "cypress-testrail-milestone-reporter",
    "reporterOptions": {
        "domain": "yourdomain.testrail.com",
        "username": "username",
        "password": "password",
        "projectId": "projectIdNumber",
        "milestoneId": "milestoneIdNumber",
        "suiteId": "suiteIdNumber",
        "createTestRun": "createTestRunFlag",
        "runName": "testRunName",
        "runId": "testRunIdNumber"
    }

For your convenience, you can generate a report with key metrics yourself at the end of each test run. Next, we focus on these metrics and their meaning.

How to understand important metrics in TestRail

Using TestRail for your QA processes makes it easy to collect important metrics and track their dynamics in addition to providing for the transparency and controllability of autotests. After executing a test run, you can review the results and important information about the tests at any time in TestRail.

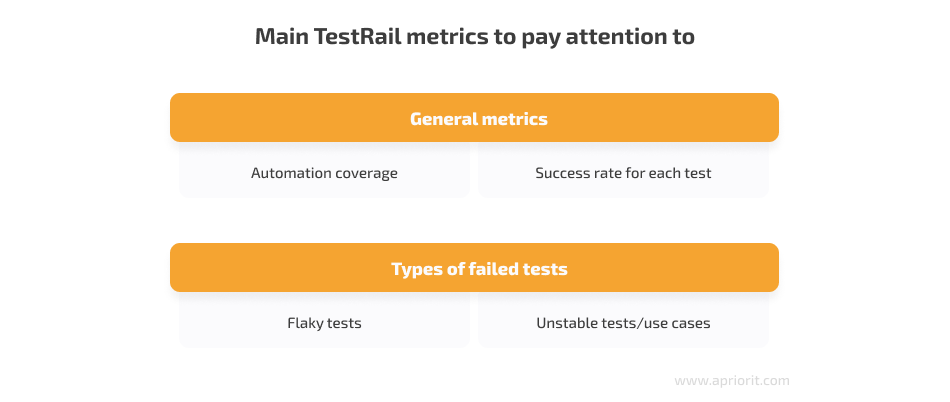

We recommend paying attention to two important metrics in a report:

1. Automation coverage is the percentage of test cases covered with autotests. When you use TestRail to manage automated test cases, they all have a separate type. You can easily calculate the percentage of acceptance test coverage as well as overall automation coverage.

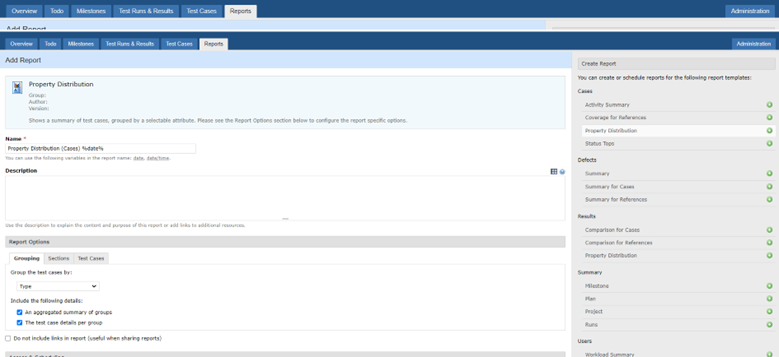

To learn the number and percentage of autotests, go to the Reports tab. Choose a Name and add a Description for your report. After that, select the Grouping tab in Report Options and group your test cases by Type.

It’s best to regularly generate a Property Distribution report to monitor metric dynamics, which will allow your team to control the build-up or scaling down of the autotest coverage.
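If you export the list of test case types (for instance, from such a report), the coverage figure itself is simple arithmetic. Here is a small sketch; the function name automation_coverage and the sample type names other than Automated are illustrative assumptions.

```python
def automation_coverage(case_types, automated_type="Automated"):
    """Return the percentage of test cases whose type is the automated one."""
    total = len(case_types)
    if total == 0:
        return 0.0  # no test cases yet, so nothing is covered
    automated = sum(1 for case_type in case_types if case_type == automated_type)
    return 100.0 * automated / total


if __name__ == "__main__":
    types = ["Automated", "Functional", "Automated", "Acceptance"]
    print(f"Automation coverage: {automation_coverage(types):.1f}%")
```

Running the same calculation on only the acceptance test cases gives the acceptance test coverage mentioned above.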

2. The success rate for each test is the percentage of Passed/Failed statuses for a particular test. With the help of TestRail reports, you can track how many times a particular test has failed and focus on the tests that fail most often.

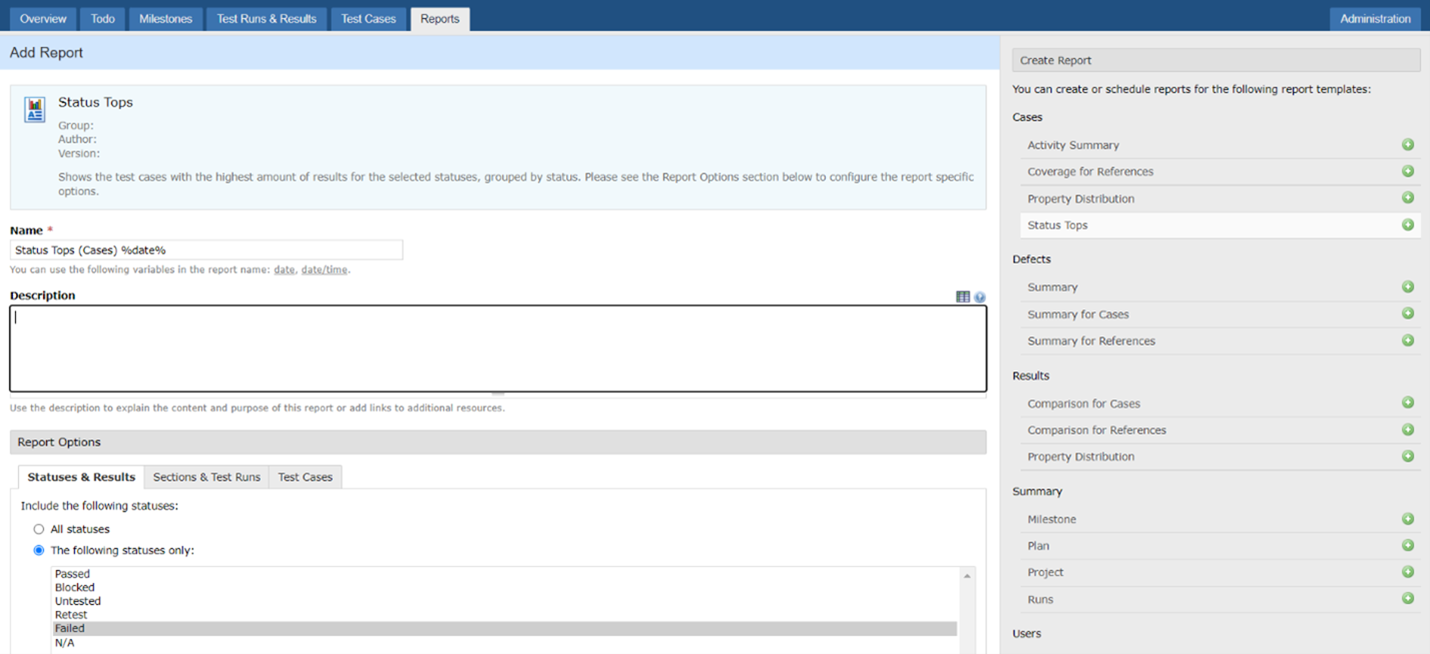

To analyze failed tests in TestRail, first go to the Reports tab. Enter a Name and add a Description for your report. After that, select the Failed status in Report Options.

After completing these actions, you will see the tests that failed most often.
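The same ranking can be reproduced outside TestRail from exported results. The sketch below assumes a hypothetical input format, a list of (case ID, status) pairs gathered over several test runs, and computes the success rate as the share of Passed results among Passed and Failed ones.

```python
from collections import Counter


def failure_stats(history):
    """Rank test cases by failure count.

    history: iterable of (case_id, status) pairs collected over several runs.
    Returns (case_id, failures, success_rate_percent) tuples, most failures first.
    """
    executions = Counter()
    failures = Counter()
    for case_id, status in history:
        if status not in ("Passed", "Failed"):
            continue  # Untested results say nothing about the success rate
        executions[case_id] += 1
        if status == "Failed":
            failures[case_id] += 1
    stats = [
        (case_id, failures[case_id],
         100.0 * (executions[case_id] - failures[case_id]) / executions[case_id])
        for case_id in executions
    ]
    # Most failures first; ties are broken by case ID for a stable order.
    return sorted(stats, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    sample = [("C1", "Passed"), ("C1", "Failed"), ("C1", "Failed"),
              ("C2", "Passed"), ("C2", "Passed"),
              ("C3", "Failed"), ("C3", "Untested")]
    for case_id, failed, rate in failure_stats(sample):
        print(f"{case_id}: failed {failed} time(s), success rate {rate:.0f}%")
```

Tests at the top of this list are the first candidates for the failure analysis described next.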

While analyzing failed tests, you can divide them into two categories:

- Flaky tests (unreliable, fragile tests) — tests that are not stable and often fail, giving a false positive result for no apparent reason instead of finding actual bugs. We describe the strategy for working with such tests in detail in the next section.

- Unstable tests or use cases — tests or use cases that are broken or buggy. These tests indicate that you have fragile code in a module, and perhaps your QA team might need to refactor it.

You need to immediately address and try to fix any test that fails for no apparent reason so it doesn’t stall the development process and occupy your QA specialists’ time in the future. Let’s take a closer look at how to deal with these flaky tests.

How to manage flaky tests

Flaky tests require special attention from QA specialists who deal with autotests, analyze the results of each test run, and issue bug reports for each failed test. Flaky tests can require quite a lot of effort from QA experts, including time and resources spent on every test run, manual analysis of failed tests, and time spent fixing the tests.

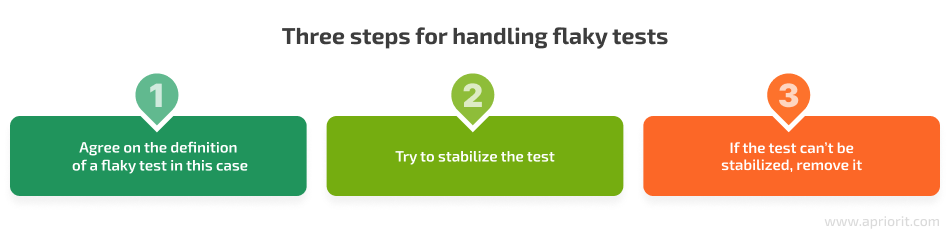

To decrease the time spent on unreliable tests, you need to explicitly describe your strategy for working with such tests within your autotest management strategy. At Apriorit, we have a clear strategy for dealing with flaky tests:

Step 1. First of all, we define a flaky test in this particular case. In our practice, we consider a test to be flaky or unreliable if it produces a false positive three times in a row.

Step 2. We try to stabilize the flaky test by fixing it.

Step 3. If the test still fails and gives a false positive result after three attempts to fix it, we might need to remove the autotest and change the type of test case in TestRail.
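The three steps can be expressed as a small decision helper. This is a sketch under stated assumptions: the outcome labels ("pass", "false_positive", "real_failure") and the function names are hypothetical, and deciding whether a failure is a false positive still requires a manual check.

```python
def is_flaky(outcomes, threshold=3):
    """Return True if the outcomes contain `threshold` false positives in a row.

    outcomes: chronological list of "pass", "false_positive", or "real_failure",
    recorded since the last attempt to fix the test.
    """
    streak = 0
    for outcome in outcomes:
        streak = streak + 1 if outcome == "false_positive" else 0
        if streak >= threshold:
            return True
    return False


def next_action(outcomes, fix_attempts, max_fix_attempts=3):
    """Map a test's recent outcomes and fix attempts to the next step."""
    if not is_flaky(outcomes):
        return "keep"       # Step 1: the test does not qualify as flaky
    if fix_attempts < max_fix_attempts:
        return "stabilize"  # Step 2: try to fix the test
    return "retire"         # Step 3: remove the autotest, change the case type


if __name__ == "__main__":
    recent = ["pass", "false_positive", "false_positive", "false_positive"]
    print(next_action(recent, fix_attempts=0))
    print(next_action(recent, fix_attempts=3))
```

Keeping both thresholds as parameters makes it easy to adapt the rule if your team defines flakiness differently.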

If a test is unreliable and you can't stabilize it, it becomes a burden for your QA team. When you can't trust a test's results, it's best to fix it as soon as possible or delete it. This way, you won't have to spend time supporting flaky tests, monitoring their results, and rechecking them manually.

Now you have a clear plan for dealing with an unreliable test. As a result, you can be sure that your QA team won’t waste too much time and effort on such tests.

Conclusion

You can take our example as the basis for your QA process and adapt it according to your needs. Automation testing results management using TestRail allows you to significantly improve your quality assurance efforts. With the help of TestRail, your QA team can reduce the time and resources spent on test runs. You can also track your results in TestRail, thus managing your test runs and handling failed tests and bugs more effectively.

Apriorit QA experts have extensive expertise in software testing. We know how to choose the most relevant QA strategies and toolsets for a specific project and ensure the best software quality within set deadlines.
